Multiplayer WebXR Readyplayer.me Avatars — Part 1

Overview of technical concepts for multiplayer WebXR readyplayer.me avatars

Intro

Real-time multiplayer WebXR experiences are an area I am very excited about.

I don’t think it’s an area that has yet been explored or utilised to its full potential.

In my previous series of posts about this topic, I talked through the concepts and practicalities of real-time user interactions, and the steps to enabling 3D object interactions.

In this series of blog posts, I’m going to expand on this by enhancing user interactions with free-to-use readyplayer.me avatars.

This first blog post will focus on the concepts; the following blog posts will explain more about the practical steps.

GIF of avatar-enhanced interactions

Overview

In this section, I’ll explain the core principles of how to build a real-time multiplayer WebXR experience that uses readyplayer.me avatars!

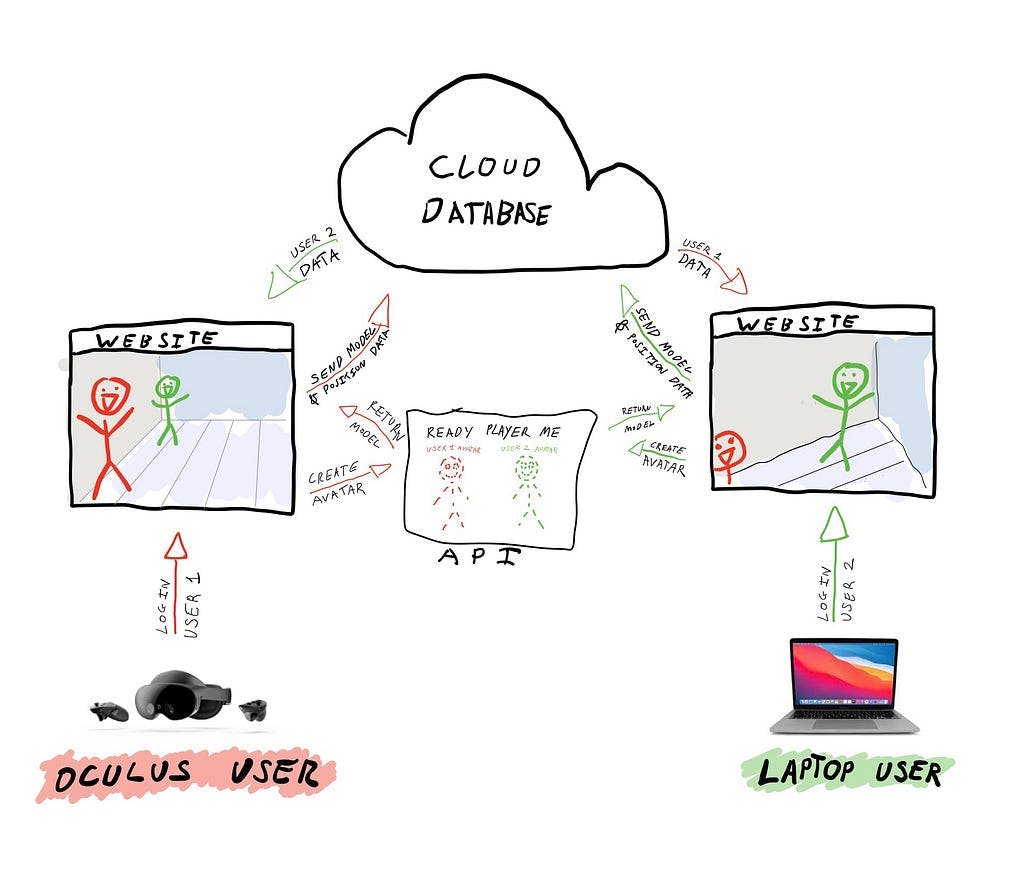

The Concept

This example works by creating a web application that renders on different devices (e.g. an Oculus Quest Pro or a MacBook Pro), then having users log into the website from those devices.

When users log in, they have the option to create an avatar using Ready Player Me, which returns a 3D model. This is stored in the cloud along with each user’s positional data, all of which is shared between users.

As a very broad overview:

A user visits the website on their device and logs in using their credentials. The site renders appropriately for their device (thanks to the WebXR API).

Users can select a model using Ready Player Me, which is then stored in the cloud.

This 3D model is then visualised on their screen alongside other users’ avatars, which is made possible by the cloud connecting these devices together.

The Tech Stack

As this was originally written for Wrapper.js, it uses Terraform, Serverless Framework and Next.js.

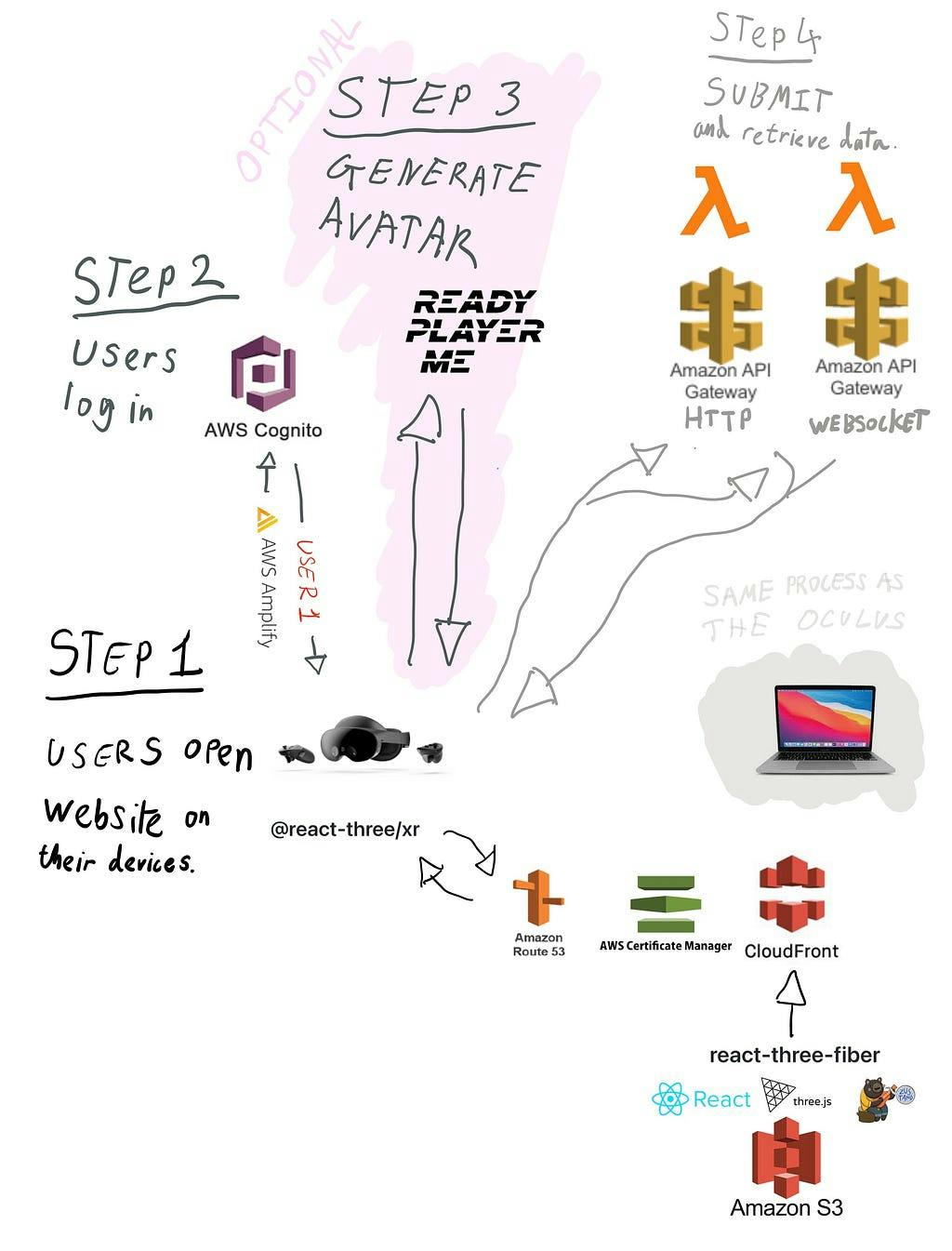

The diagram above details this further.

I use Terraform to create all cloud resources except for Lambda functions, which are created and managed by Serverless Framework.

All environment variables required to interact with Amazon Cognito are passed to the React.js Front End created using Next.js.
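As a minimal sketch of how those values might reach the browser: Next.js only inlines environment variables prefixed with `NEXT_PUBLIC_` into client-side code, and only when each one is referenced literally. A small validation helper can then catch a missing or mismatched setting before it is handed to Amplify’s configuration call (whose exact shape depends on the Amplify version). The variable names and the `validateCognitoConfig` helper below are hypothetical, not taken from the original project.

```typescript
// The Cognito settings the Front End needs (names are illustrative).
interface CognitoConfig {
  region: string;
  userPoolId: string;
  userPoolClientId: string;
}

// Raw values as read from the environment; any of them may be missing.
type RawCognitoEnv = { [K in keyof CognitoConfig]: string | undefined };

function validateCognitoConfig(raw: RawCognitoEnv): CognitoConfig {
  const { region, userPoolId, userPoolClientId } = raw;
  if (!region || !userPoolId || !userPoolClientId) {
    const missing = (["region", "userPoolId", "userPoolClientId"] as const).filter(
      (key) => !raw[key],
    );
    throw new Error(`Missing Cognito settings: ${missing.join(", ")}`);
  }
  // Cognito user pool IDs have the form "<region>_<suffix>", e.g. "eu-west-2_AbC123",
  // so a mismatch usually means the variables come from different environments.
  if (!userPoolId.startsWith(`${region}_`)) {
    throw new Error(`User pool ${userPoolId} does not belong to region ${region}`);
  }
  return { region, userPoolId, userPoolClientId };
}

// Usage inside the Next.js app (hypothetical variable names). Each variable is
// referenced literally because dynamic lookups such as process.env[name] are not inlined:
// const cognitoConfig = validateCognitoConfig({
//   region: process.env.NEXT_PUBLIC_COGNITO_REGION,
//   userPoolId: process.env.NEXT_PUBLIC_COGNITO_USER_POOL_ID,
//   userPoolClientId: process.env.NEXT_PUBLIC_COGNITO_CLIENT_ID,
// });
```

Failing fast here gives a clear error at start-up rather than a confusing authentication failure later.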

The Front End also requests the 3D model from Ready Player Me, which is then rendered along with the rest of the 3D environment with React Three Fiber and Three.js.

The App Flow

If you were to map out when these technologies get used within this example, it would look something like the diagram below.

If you start at the bottom of the diagram, you can see that all the Front End files are exported statically and hosted in the S3 bucket.

These files are then distributed through the CloudFront CDN, secured with an SSL certificate from AWS Certificate Manager, and served on a custom domain name using Amazon Route 53.

At this point, Step 1 begins:

The user opens the website on their device (e.g. the Oculus Quest Pro) by visiting the domain name.

At this point, React Three XR renders the website based on the device’s capabilities (in this case, a mixed reality headset).
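Under the hood, this kind of capability check relies on the WebXR Device API’s `navigator.xr.isSessionSupported()`, which resolves to a boolean for a given session mode such as `"immersive-ar"` or `"immersive-vr"`. The sketch below is not React Three XR’s internal logic; `detectRenderMode`, `pickRenderMode` and the mode labels are hypothetical, showing one way an app could decide between a mixed reality, VR or flat-screen experience.

```typescript
// The subset of the WebXR XRSystem interface (navigator.xr) this sketch needs.
interface XRSessionChecker {
  isSessionSupported(mode: "immersive-ar" | "immersive-vr" | "inline"): Promise<boolean>;
}

type RenderMode = "mixed-reality" | "virtual-reality" | "flat-screen";

// Pure decision step, kept separate from the async browser call so it is easy to test.
function pickRenderMode(arSupported: boolean, vrSupported: boolean): RenderMode {
  if (arSupported) return "mixed-reality"; // e.g. a headset with passthrough
  if (vrSupported) return "virtual-reality";
  return "flat-screen"; // e.g. a laptop browser without immersive support
}

// `xr` is navigator.xr in a browser; it is undefined where WebXR is unavailable.
async function detectRenderMode(xr: XRSessionChecker | undefined): Promise<RenderMode> {
  if (!xr) return "flat-screen";
  try {
    const [ar, vr] = await Promise.all([
      xr.isSessionSupported("immersive-ar"),
      xr.isSessionSupported("immersive-vr"),
    ]);
    return pickRenderMode(ar, vr);
  } catch {
    // The check can reject, for example when a permissions policy blocks WebXR,
    // so fall back to the flat-screen experience.
    return "flat-screen";
  }
}
```

In a browser you would pass `navigator.xr` in; on a MacBook Pro this would typically resolve to `"flat-screen"`, while a headset with passthrough support would get `"mixed-reality"`.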

Next up is Step 2:

The user logs into their Amazon Cognito account using the AWS Amplify library on the Front End.

Once the user has logged in, the website has a unique identifier for the person using that device.
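That unique identifier is typically the `sub` claim in the Cognito ID token, a UUID that Cognito assigns to each user in a user pool. Amplify already exposes the signed-in user’s details, so the hypothetical `getUserIdFromIdToken` helper below is only meant to show where the identifier lives: it decodes the token’s base64url payload segment. It does not verify the token’s signature, so anything on the backend (such as the WebSocket Lambda functions) should never trust an identifier obtained this way.

```typescript
// Decodes one base64url-encoded JWT segment into a UTF-8 string.
function decodeBase64Url(segment: string): string {
  const base64 = segment.replace(/-/g, "+").replace(/_/g, "/");
  const padded = base64 + "=".repeat((4 - (base64.length % 4)) % 4);
  const bytes = Uint8Array.from(atob(padded), (c) => c.charCodeAt(0));
  return new TextDecoder().decode(bytes);
}

// Returns the `sub` claim of a Cognito ID token WITHOUT verifying its signature.
// Fine for keying local Front End state; not suitable for authorisation decisions.
function getUserIdFromIdToken(idToken: string): string {
  const parts = idToken.split(".");
  if (parts.length !== 3) {
    throw new Error("Not a JWT: expected three dot-separated parts");
  }
  const payload = JSON.parse(decodeBase64Url(parts[1])) as { sub?: unknown } | null;
  const sub = payload?.sub;
  if (typeof sub !== "string") {
    throw new Error("Token payload has no string sub claim");
  }
  return sub;
}
```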

With the user logged in and uniquely identified, they are set up nicely for Step 3:

The user has the option to open a modal that lets them configure and create a 3D model.

If the user then selects this model at the end of the configuration process, a URL pointing to the .glb file used to render the avatar is returned to the Front End.
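One common way to get that URL back is Ready Player Me’s iframe integration, where the avatar creator posts JSON messages to the parent window. The sketch below assumes the message shape described in Ready Player Me’s frame API documentation at the time of writing (`source: "readyplayerme"`, an `eventName` of `"v1.avatar.exported"` and the URL under `data.url`); it is worth checking against the current docs, and `getExportedAvatarUrl` is a hypothetical helper name.

```typescript
// Shape of the messages the Ready Player Me iframe posts to the parent window
// (assumed from RPM's frame API docs; verify against the current documentation).
interface RpmFrameMessage {
  source?: string;
  eventName?: string;
  data?: { url?: string };
}

// Returns the exported avatar's .glb URL, or null for any other message.
function getExportedAvatarUrl(messageData: unknown): string | null {
  let message: RpmFrameMessage | null = null;
  if (typeof messageData === "string") {
    try {
      message = JSON.parse(messageData) as RpmFrameMessage | null;
    } catch {
      return null; // not JSON, so not a Ready Player Me message
    }
  } else if (typeof messageData === "object" && messageData !== null) {
    message = messageData as RpmFrameMessage;
  }
  if (!message || message.source !== "readyplayerme") return null;
  if (message.eventName !== "v1.avatar.exported") return null;

  const url = message.data?.url;
  if (typeof url !== "string") return null;
  try {
    // Only accept absolute URLs whose path points at a .glb file.
    return new URL(url).pathname.endsWith(".glb") ? url : null;
  } catch {
    return null; // not a valid absolute URL
  }
}
```

In the page, this would be called from a `window` `message` event listener with `event.data`, ideally after checking that `event.origin` matches the Ready Player Me subdomain the iframe was loaded from.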

It’s at this point that Step 4 begins:

If an avatar was selected, it is saved to DynamoDB so that other users can pull it into their own environments and render it.

The user then emits their x/y/z coordinates in real time to the WebSocket API Gateway, which stores them in DynamoDB. Other users can then see these positions and update their Front Ends in real time.
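To make Step 4 more concrete, here is a sketch of the client-side message handling, assuming a JSON protocol over the API Gateway WebSocket API. API Gateway picks a route using a route selection expression, commonly `$request.body.action`, so each outgoing message carries an `action` field. The route name (`updatePosition`), the message fields, the 10 updates per second rate and both helper functions are hypothetical. Throttling matters because sending a position on every rendered frame would mean dozens of WebSocket messages (and DynamoDB writes) per user per second.

```typescript
interface Vec3 { x: number; y: number; z: number; }

// Outgoing message; "action" matches an assumed route selection expression of $request.body.action.
interface PositionUpdate { action: "updatePosition"; userId: string; position: Vec3; }

// Incoming broadcast (hypothetical shape) describing one remote user.
interface RemoteUserState { userId: string; position: Vec3; avatarUrl?: string; }

// Wraps a send function so positions go out at most once per intervalMs,
// and only when the user has moved at least minDistance since the last send.
function createPositionEmitter(
  send: (message: string) => void,
  userId: string,
  intervalMs = 100, // roughly 10 updates per second (assumed)
  minDistance = 0.01, // WebXR positions are in metres
  now: () => number = Date.now,
) {
  let lastSentAt = -Infinity;
  let lastSent: Vec3 | null = null;
  return (position: Vec3): boolean => {
    const t = now();
    if (t - lastSentAt < intervalMs) return false;
    if (
      lastSent &&
      Math.hypot(position.x - lastSent.x, position.y - lastSent.y, position.z - lastSent.z) < minDistance
    ) {
      return false;
    }
    const update: PositionUpdate = { action: "updatePosition", userId, position: { ...position } };
    send(JSON.stringify(update));
    lastSentAt = t;
    lastSent = { ...position };
    return true;
  };
}

// Merges a broadcast into the local map of remote users, ignoring our own echo.
// A known avatarUrl is kept, so later position-only messages don't erase it.
function applyRemoteUpdate(users: Map<string, RemoteUserState>, raw: string, selfId: string): void {
  let msg: RemoteUserState | null = null;
  try {
    msg = JSON.parse(raw) as RemoteUserState | null;
  } catch {
    return; // ignore malformed messages
  }
  if (!msg || typeof msg.userId !== "string" || msg.userId === selfId || !msg.position) return;
  const previous = users.get(msg.userId);
  users.set(msg.userId, {
    userId: msg.userId,
    position: msg.position,
    avatarUrl: msg.avatarUrl ?? previous?.avatarUrl,
  });
}
```

In the render loop you might call the emitter with the camera’s position each frame (a Three.js `Vector3` has matching `x`/`y`/`z` fields), while the socket’s `onmessage` handler calls `applyRemoteUpdate` and the scene renders one avatar per entry in the map.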

Conclusion

This has been a long blog post; I hope it has helped show you how real-time multiplayer WebXR experiences that use readyplayer.me avatars work!

In the next post, I will detail the practical side of the code that enables all of this.

In the meantime, I hope you enjoyed this post. Have fun :D

Multiplayer WebXR Readyplayer.me Avatars — Part 1 was originally published in JavaScript in Plain English on Medium, where people are continuing the conversation by highlighting and responding to this story.